How to Measure FLOP/s for Neural Networks Empirically? – Epoch

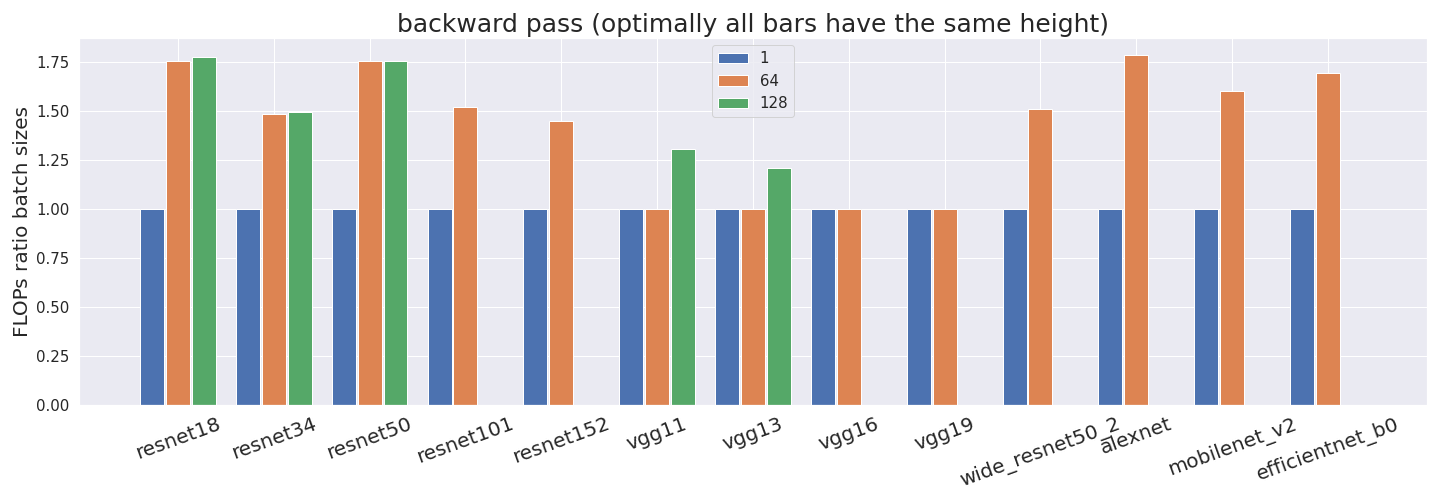

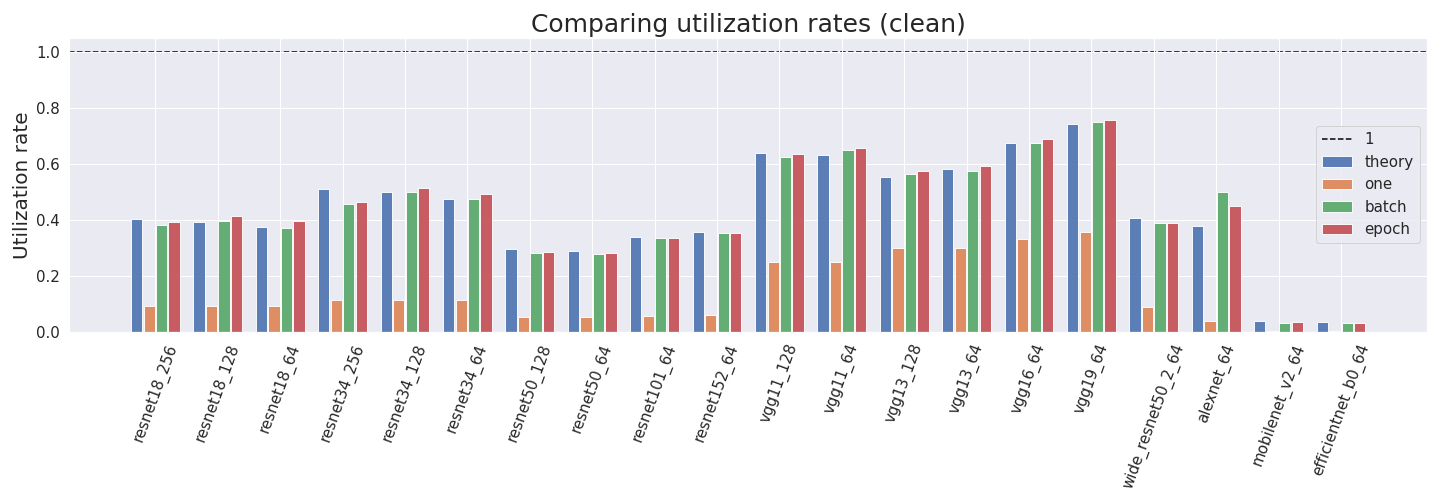

Computing the utilization rate for multiple neural network architectures.

Efficient Inference in Deep Learning - Where is the Problem? - Deci

The base learning rate for a batch size of 256 is 0.2, with a poly decay policy (power=2).

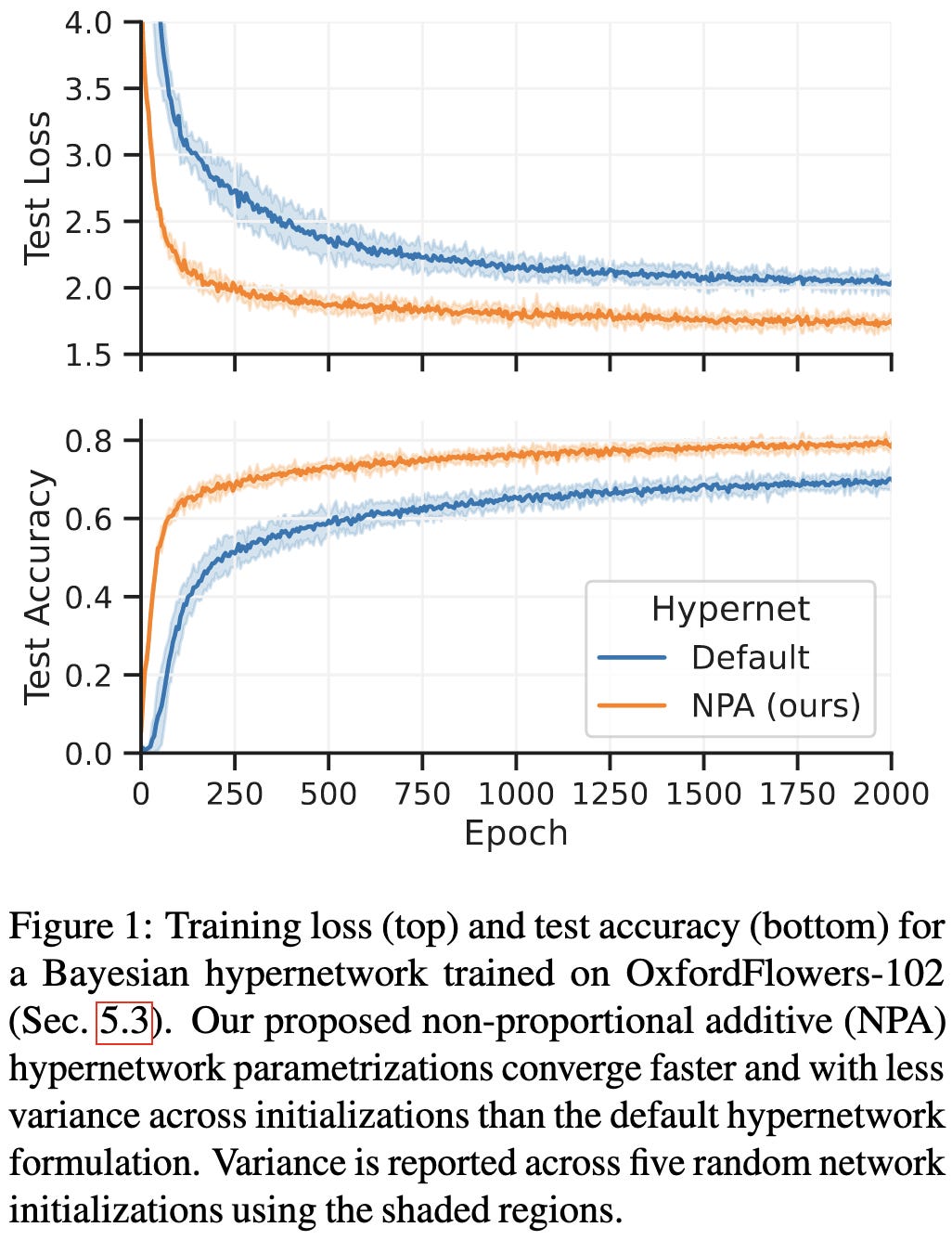

2023-4-23 arXiv roundup: Adam instability, better hypernetworks, More Branch-Train-Merge

CoAxNN: Optimizing on-device deep learning with conditional approximate neural networks - ScienceDirect

Missing well-log reconstruction using a sequence self-attention deep-learning framework

ICLR 2021

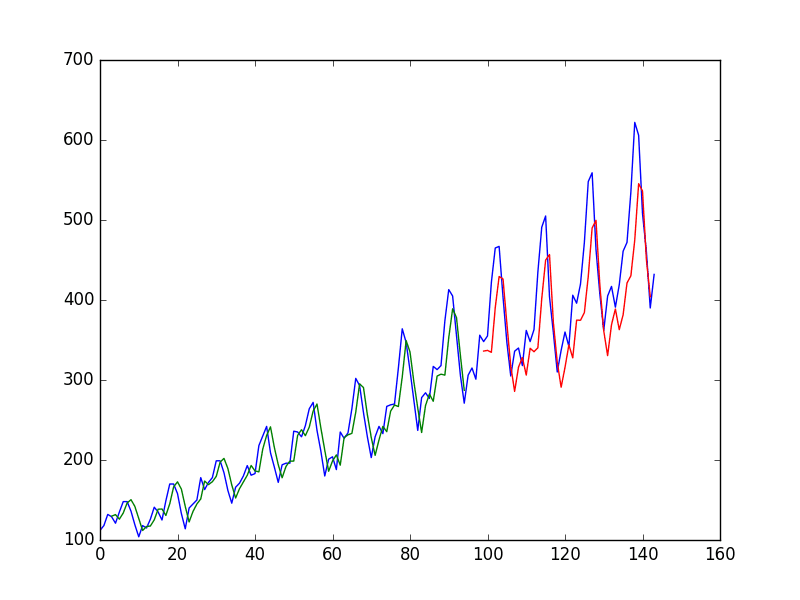

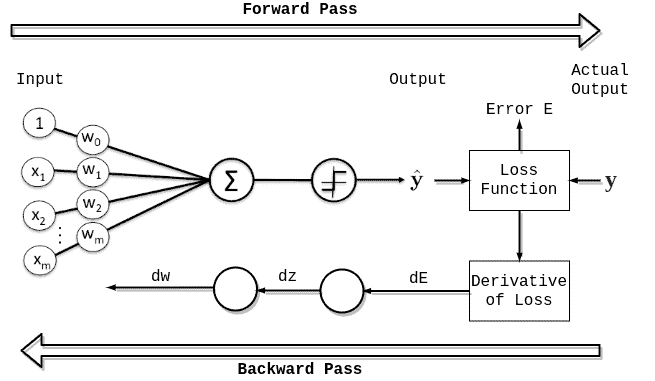

Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras

Sensors, Free Full-Text

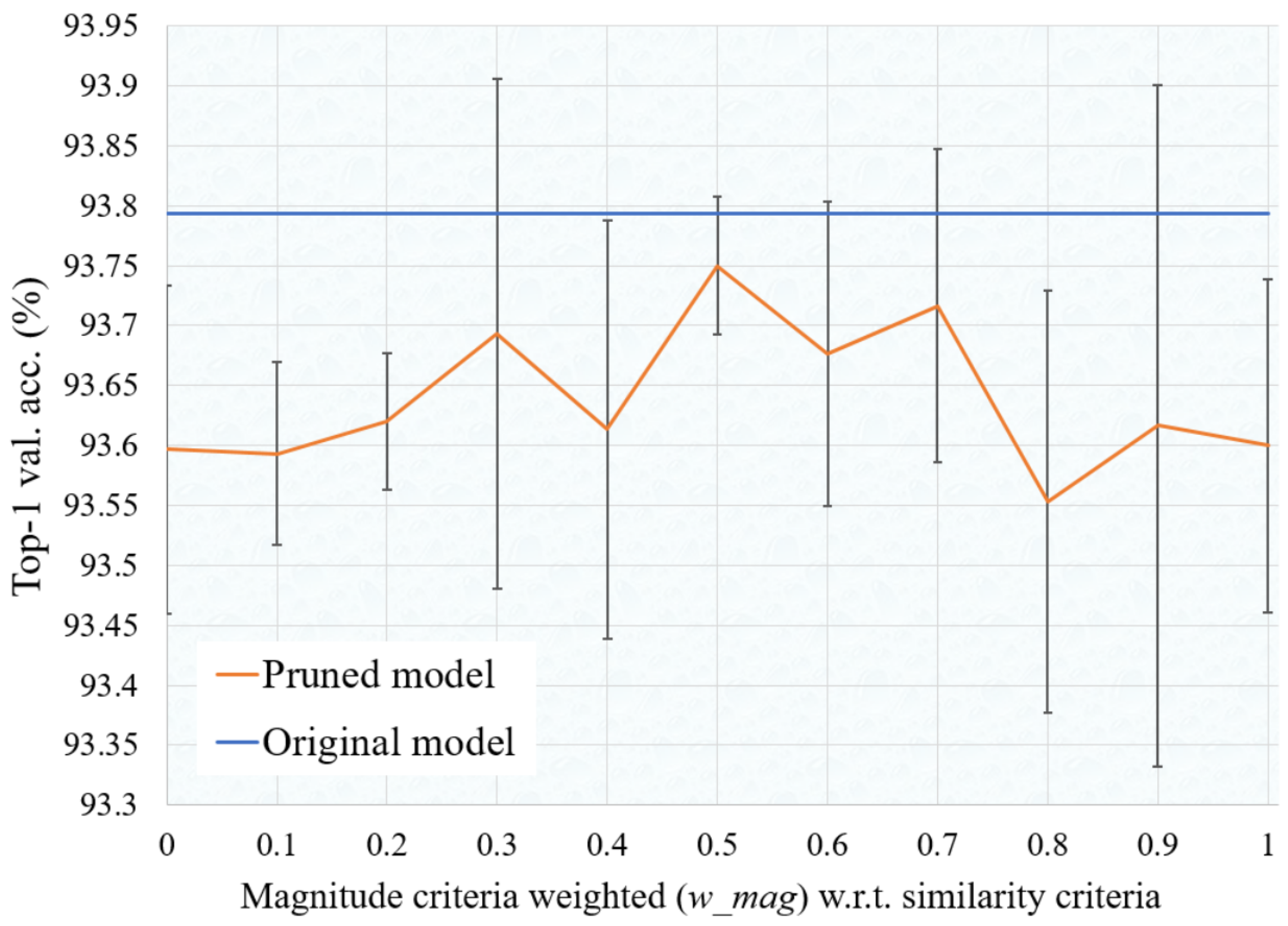

Applied Sciences, Free Full-Text

Epoch in Neural Networks | Baeldung on Computer Science

FLOPS Calculation [D] : r/MachineLearning

SiaLog: detecting anomalies in software execution logs using the siamese network